Revolutionizing Cloud Performance with NPDI

Key Advantages of NPDI

Bare Metal

KVM/libvirt/VirtIO-Net

Microsoft Azure

Kubernetes

AWS

Ideal Applications for NPDI

NPDI is perfectly suited for high-performance environments, including:

- In-Memory Databases: Optimizes applications like Memcached for faster data access.

- Web-Caching & Load-Balancing: Streamlines network traffic management.

- Content Delivery Networks (CDNs): Enhances content distribution efficiency.

- HTTP Servers: Boosts web server response times.

- Message Brokers: Improves message-passing interfaces with low latency.

Overview

In existing Linux applications such as memcached, NGINX or HAProxy, I/O goes through the UNIX socket API, a set of function calls implemented in libc. Each libc function issues a system call (syscall) that hands control to the Linux kernel, which in turn copies the data from userland and does the actual work.
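To make the conventional path concrete, here is a minimal sketch in Python. It uses a local `socketpair()` rather than a real NIC, and the helper name `roundtrip` is purely illustrative. Each `sendall` and `recv` call ends up in a kernel syscall that copies the payload across the user/kernel boundary.

```python
import socket


def roundtrip(payload: bytes) -> bytes:
    """Send payload through a connected socket pair and read it back."""
    # socketpair() returns two connected sockets; no port or network needed.
    sender, receiver = socket.socketpair()
    try:
        # Wrapper around a send-family syscall: the kernel copies
        # the payload out of user space.
        sender.sendall(payload)
        # Wrapper around a recv-family syscall: the kernel copies
        # the data back into a user-space buffer.
        return receiver.recv(4096)
    finally:
        sender.close()
        receiver.close()


if __name__ == "__main__":
    print(roundtrip(b"get some_key\r\n"))
```

Every request served by a socket-based application pays for at least one such pair of syscalls and copies, which is the overhead the rest of this document is concerned with.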

Some applications use low-level APIs such as DPDK, but although DPDK has existed for more than ten years, most applications still do not use it. The reasons are integration complexity and the lack of out-of-the-box network stack support. Moreover, in most cases DPDK takes ownership of the Ethernet adapter, which results in hard-to-manage configurations.

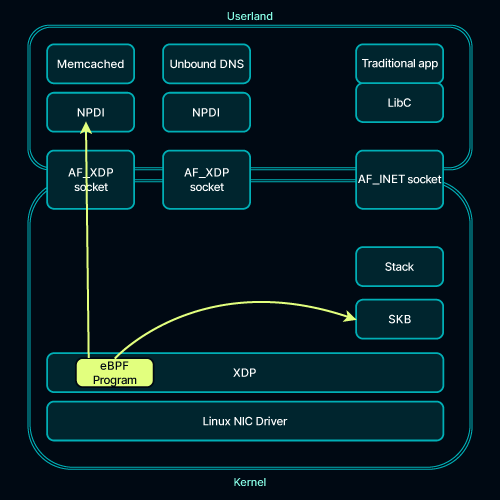

NPDI can run on top of any hardware. This is achieved by using AF_XDP, a relatively new feature of the Linux kernel that delivers packets directly to the user application, bypassing the kernel network stack. Traffic coming from the network is filtered by an eBPF program and directed to the correct application (see the blue path on the diagram). Meanwhile, non-accelerated traffic is delivered to the Linux networking stack, which passes it to the non-accelerated application.

AF_XDP has been part of the Linux kernel since version 5.3, so NPDI can leverage it on all recent Linux distributions.
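A quick way to check whether a host meets the kernel version quoted above is to parse its release string. The sketch below is only an illustration: the helper names and the `MIN_KERNEL` constant are assumptions made for this example and are not part of NPDI.

```python
import platform
import re
from typing import Tuple

# Minimum kernel version quoted in the text above (an input to this sketch).
MIN_KERNEL = (5, 3)


def parse_kernel_release(release: str) -> Tuple[int, int]:
    """Extract (major, minor) from a release string such as '5.15.0-91-generic'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def meets_minimum_kernel(release: str) -> bool:
    """Return True if the release is at least MIN_KERNEL."""
    return parse_kernel_release(release) >= MIN_KERNEL


if __name__ == "__main__":
    current = platform.release()
    print(current, meets_minimum_kernel(current))
```

A version check is only a first filter: whether AF_XDP is actually usable also depends on how the distribution's kernel was configured.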

It should be stressed that the system works in virtualized environments and in clouds such as MS Azure and AWS; it does not require a bare metal server.

NPDI also works in Kubernetes and over VirtIO-Net. It works practically everywhere.

The performance gain depends on the environment and the extent to which the system is bottlenecked on the network.

NPDI does not require:

- Any modifications to the installed kernel

- Recompilation of the application

- Reconfiguration of the system.

Rolling back is instant: unregister our eBPF program, unload the kernel module, stop using our system and switch back to the old ways. Safe and effortless.

Below are examples of real-life applications and the gain they get when using NPDI.

Each application was executed in two configurations:

- Linux: no NPDI, i.e. the way it runs on a pure Linux system,

- NPDI: using our system, the fast way!

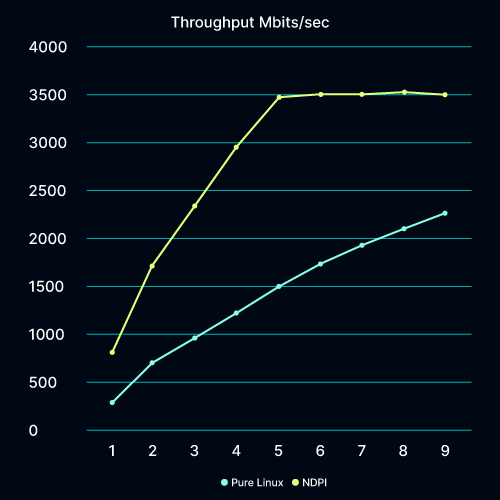

Running a high-performance proxy.

The NGINX server is accelerated as well.

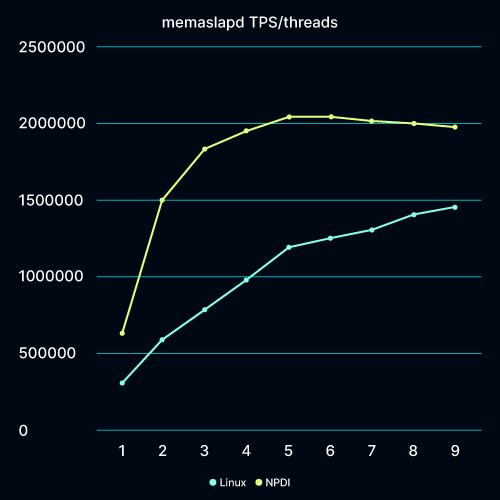

Memcached uses a different Linux mechanism to scale across multiple CPUs. The tests run it in NAPI mode. By default, memcached assigns connections to worker threads in a round-robin manner. With NAPI-ID-based selection, the worker thread is instead chosen according to the NIC hardware RX queue on which the incoming request is received.
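The difference between the two selection policies can be sketched as follows. This is a simplified model written for illustration, not memcached's actual code: the class names are hypothetical, and the NAPI IDs are passed in as plain integers (on Linux they would typically be read per connection through the `SO_INCOMING_NAPI_ID` socket option).

```python
class RoundRobinSelector:
    """Default policy: hand out workers in turn, ignoring the RX queue."""

    def __init__(self, num_workers: int) -> None:
        self.num_workers = num_workers
        self._next = 0

    def select(self, napi_id: int) -> int:
        worker = self._next
        self._next = (self._next + 1) % self.num_workers
        return worker


class NapiIdSelector:
    """NAPI-ID policy: connections from the same RX queue share one worker."""

    def __init__(self, num_workers: int) -> None:
        self.num_workers = num_workers
        self._worker_for_napi = {}

    def select(self, napi_id: int) -> int:
        # First time a NAPI ID is seen, bind it to the next worker slot.
        if napi_id not in self._worker_for_napi:
            slot = len(self._worker_for_napi) % self.num_workers
            self._worker_for_napi[napi_id] = slot
        return self._worker_for_napi[napi_id]


if __name__ == "__main__":
    incoming = [101, 102, 101, 103, 102, 101]  # hypothetical NAPI IDs
    rr = RoundRobinSelector(3)
    napi = NapiIdSelector(3)
    print([rr.select(n) for n in incoming])    # [0, 1, 2, 0, 1, 2]
    print([napi.select(n) for n in incoming])  # [0, 1, 0, 2, 1, 0]
```

With the NAPI-ID policy, all traffic from one RX queue stays on one worker, so the thread that handles a connection is tied to the queue where its packets arrive.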

NGINX Server - 3 Cores with NPDI like 9 Cores

- 3 workers using NPDI deliver about as much throughput as Linux reaches with 9 workers on this system (2244 vs. 2284 Mbits/sec)

Showing network rate: Test parameters: wrk_request_size=0, srv_file_size=1024, name=proxy

The test ran wrk2 issuing HTTP GET requests for a 1K buffer; each request is proxied by the accelerated NGINX proxy to the server that serves it.

| Proxy Workers | Linux (Mbits/sec) | NPDI (Mbits/sec) | NPDI gain |
| --- | --- | --- | --- |
| 1 | 346.450 | 804.216 | +132.13% |
| 2 | 669.411 | 1569.260 | +134.42% |
| 3 | 952.359 | 2244.120 | +135.64% |
| 4 | 1224.820 | 2924.350 | +138.76% |
| 5 | 1496.530 | 3466.590 | +131.64% |
| 6 | 1738.370 | 3536.550 | +103.44% |
| 7 | 1945.910 | 3524.560 | +81.13% |
| 8 | 2086.000 | 3585.040 | +71.86% |
| 9 | 2283.800 | 3471.880 | +52.02% |
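The gain column is the relative increase of the NPDI rate over the Linux rate. The short sketch below recomputes it for three rows of the proxy table; the function name `gain_percent` is chosen for this example only.

```python
def gain_percent(linux_rate: float, npdi_rate: float) -> float:
    """Relative improvement of NPDI over Linux, in percent."""
    return (npdi_rate / linux_rate - 1.0) * 100.0


# (workers, Linux Mbits/sec, NPDI Mbits/sec) taken from the proxy table.
rows = [
    (1, 346.450, 804.216),
    (5, 1496.530, 3466.590),
    (9, 2283.800, 3471.880),
]

if __name__ == "__main__":
    for workers, linux_rate, npdi_rate in rows:
        print(f"{workers} workers: {gain_percent(linux_rate, npdi_rate):+.2f}%")
    # 1 workers: +132.13%
    # 5 workers: +131.64%
    # 9 workers: +52.02%
```

The gain shrinks at higher worker counts because the NPDI rate flattens out at roughly 3500 Mbits/sec from 5 workers on, while the Linux rate keeps climbing.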

Memcached – 2 Cores with NPDI like 9 without

- 2 threads with NPDI are as fast as Linux ever gets on this system;

- 3 threads with NPDI blow Linux out of the water.

Showing network rate: mc_napi_ids=TRUE, bm_tool=memaslap, bm_data_size=32, bm_binary=FALSE

We’re measuring TPS – you can see that we saturate the testbench very fast.

| Threads | Linux (TPS) | NPDI (TPS) | NPDI gain |
| --- | --- | --- | --- |
| 1 | 326212 | 641149 | +96.54% |
| 2 | 600495 | 1492840 | +148.60% |
| 3 | 794265 | 1878740 | +136.54% |
| 4 | 993351 | 1973400 | +98.66% |
| 5 | 1196730 | 2039490 | +70.42% |
| 6 | 1250530 | 2041980 | +63.29% |
| 7 | 1302530 | 2007620 | +54.13% |
| 8 | 1394360 | 1997100 | +43.23% |
| 9 | 1440900 | 1984800 | +37.75% |

So with just 3 cores you can replace a 9+ core system and still get far better performance.
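The core-count claim can be checked directly against the memcached figures. The following sketch, with illustrative names, finds the smallest NPDI thread count whose TPS reaches the best Linux result, and the margin that 3 NPDI threads have over it.

```python
# TPS per thread count, copied from the memcached table.
LINUX_TPS = {1: 326212, 2: 600495, 3: 794265, 4: 993351, 5: 1196730,
             6: 1250530, 7: 1302530, 8: 1394360, 9: 1440900}
NPDI_TPS = {1: 641149, 2: 1492840, 3: 1878740, 4: 1973400, 5: 2039490,
            6: 2041980, 7: 2007620, 8: 1997100, 9: 1984800}


def smallest_matching_threads(baseline: dict, accelerated: dict):
    """Smallest accelerated thread count that reaches the baseline's best TPS."""
    target = max(baseline.values())
    for threads in sorted(accelerated):
        if accelerated[threads] >= target:
            return threads
    return None  # the accelerated run never reaches the baseline's best


if __name__ == "__main__":
    print(smallest_matching_threads(LINUX_TPS, NPDI_TPS))  # 2
    margin = NPDI_TPS[3] / max(LINUX_TPS.values()) - 1.0
    print(f"3 NPDI threads vs best Linux: {margin:+.1%}")  # +30.4%
```

On these numbers, 2 NPDI threads already exceed the best Linux result, which was measured at 9 threads, and 3 NPDI threads exceed it by about 30%.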

What about latency? It is very important for memcached. Let's compare 3 threads of NPDI with 9 threads of Linux:

- Linux: Avg=0.18197 ; p99.9=0.519

- NPDI: Avg=0.14026 ; p99.9=0.344